I think FIR Designer and Crosslite+ are really good commercial software packages... beggars (like moi) can't be choosers, however!

Yeah, I hear you. If I hadn't got so tired of all the manual work and time it took with rePhase on my 4- and 5-way DIY unity/synergy speakers, doing each driver section by section, I'd still be with "rePhase only"... just for saving some $$.

It's probably worth noting, though, that for folks wanting a rather easy-to-use but still very powerful FIR generator, Eclipse Audio has two versions, both for less money than either Acourate or Audiolense.

Above those versions it gets pricey, however, and I must admit I don't like the business model for the pricier versions, which I feel creates a need for ongoing subscriptions.

Crosslite+ is more of a new toy for me than anything. It does some really cool stuff: it allows virtual speaker tuning, and it can automatically locate both impulse peaks (like most measurement software) and the impulse's initial rise (which I find killer helpful for IIR work).
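
For anyone wondering what the difference between the impulse peak and the initial rise looks like in practice, here is a rough Python sketch. It is not how Crosslite+ does it (its method isn't documented here); the function name `find_peak_and_rise` and the 10%-of-peak threshold are purely assumptions for illustration.

```python
def find_peak_and_rise(ir, rise_fraction=0.1):
    """Return (peak_index, rise_index) for an impulse response.

    ir            -- list of sample values (floats)
    rise_fraction -- ASSUMED threshold: the "initial rise" is taken as the
                     first sample whose magnitude reaches this fraction of
                     the peak magnitude. Real tools may define it differently.
    """
    if not ir:
        raise ValueError("impulse response is empty")

    magnitudes = [abs(sample) for sample in ir]

    # Peak: the sample with the largest absolute value (first one on ties).
    peak_index = max(range(len(magnitudes)), key=magnitudes.__getitem__)

    # Initial rise: first sample at or above the threshold.
    threshold = rise_fraction * magnitudes[peak_index]
    rise_index = next(i for i, m in enumerate(magnitudes) if m >= threshold)

    return peak_index, rise_index


# Toy impulse response: a little pre-rise, then the main peak.
ir = [0.0, 0.0, 0.05, 0.5, 1.0, 0.3, -0.2]
peak, rise = find_peak_and_rise(ir)
print(peak, rise)  # 4 3
```

In this toy example the rise sits one sample ahead of the peak. On a real driver measurement the gap depends on the driver's bandwidth, which is why the two reference points can give different delay values.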

Quasi-anechoic speaker measurements and FIR have become as big a part of the hobby as the actual speaker building.

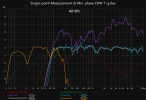

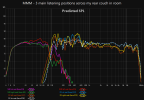

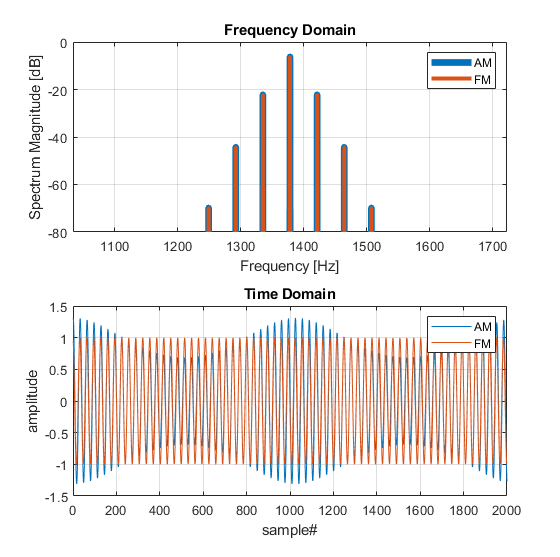

I am really convinced phase matters. Setting audibility aside, I think paying attention to it makes for more refined basic frequency response spinoramas.

And I personally think phase does have audible effects, more so in the lower frequency range. Hence, I enjoy the comments from j.j. and others giving ways to test/experiment.

Anyway, this is probably the wrong thread for my 2c on FIR generators... I'll get back to, and stick to, PHASE.