dallasjustice


There's been a lot of complaining lately about modern recordings. To me, the most legitimate complaints are the ones about severely clipped audio. Most audiophiles don't listen to this kind of music, but I listen to plenty of rock, electronic, dance and metal, and I know that much of it is dynamically compressed and clipped.
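
For anyone wondering what "clipped" looks like in the data: one rough heuristic is to look for runs of consecutive samples stuck at or near full scale. The sketch below is a hypothetical helper (`clipped_runs`, with assumed `threshold` and `min_run` defaults), assumes the samples are already decoded to floats between -1.0 and 1.0, and is not how Perfect Declipper or any other particular tool detects clipping.

```python
def clipped_runs(samples, threshold=0.999, min_run=3):
    """Return (start_index, length) for every run of at least `min_run`
    consecutive samples whose magnitude is >= `threshold` (full scale = 1.0).
    Hypothetical heuristic: threshold and min_run are assumptions."""
    runs = []
    start = None
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i  # a run of pinned samples begins here
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    # Handle a run that lasts until the very last sample.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs


if __name__ == "__main__":
    # Toy waveform that sits on the positive ceiling for 4 samples.
    demo = [0.2, 0.6, 1.0, 1.0, 1.0, 1.0, 0.6, 0.2, -0.5, -1.0, -0.5]
    print(clipped_runs(demo))  # [(2, 4)]
```

A single sample touching full scale isn't flagged, since a lone peak can be legitimate; a flat top several samples long is the classic sign of hard clipping.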

It's been my argument that this type of music is meant to be heard loud. Still, I want the most enjoyable experience at home while listening loud. So why not take matters into my own hands? Instead of complaining, I did something about it!!

Perfect Declipper is a VST plugin that reduces hard-limiting distortion and increases dynamic range where needed.

I downloaded Perfect Declipper and installed it in JRiver.

http://www.perfectdeclipper.com/download/

Yes, I paid 100 euros. I know that's a lot of money for software, but I was hoping it would really improve my listening experience, and so far it has. I think it works very well on almost all types of low-DR/clipped music. If you listen to these types of recordings, I'd suggest you check this shit out.
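
"Low DR" is easiest to get a feel for with crest factor, the ratio of peak level to RMS level in dB. Heavily limited masters push the average level up toward the peaks, so their crest factor shrinks. This is a simpler, related measure, not the algorithm behind the "DR" numbers people usually quote; `crest_factor_db` is a hypothetical helper, and it assumes float samples between -1.0 and 1.0.

```python
import math


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB (a rough proxy for dynamic range).
    A full-scale square wave scores 0 dB and a pure sine about 3.01 dB;
    less compressed music typically scores higher."""
    if not samples:
        raise ValueError("need at least one sample")
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        raise ValueError("silent input has no defined crest factor")
    return 20 * math.log10(peak / rms)


if __name__ == "__main__":
    square = [1.0, -1.0] * 32
    sine = [math.sin(2 * math.pi * k / 64) for k in range(64)]
    print(round(crest_factor_db(square), 2))  # 0.0
    print(round(crest_factor_db(sine), 2))    # 3.01
```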

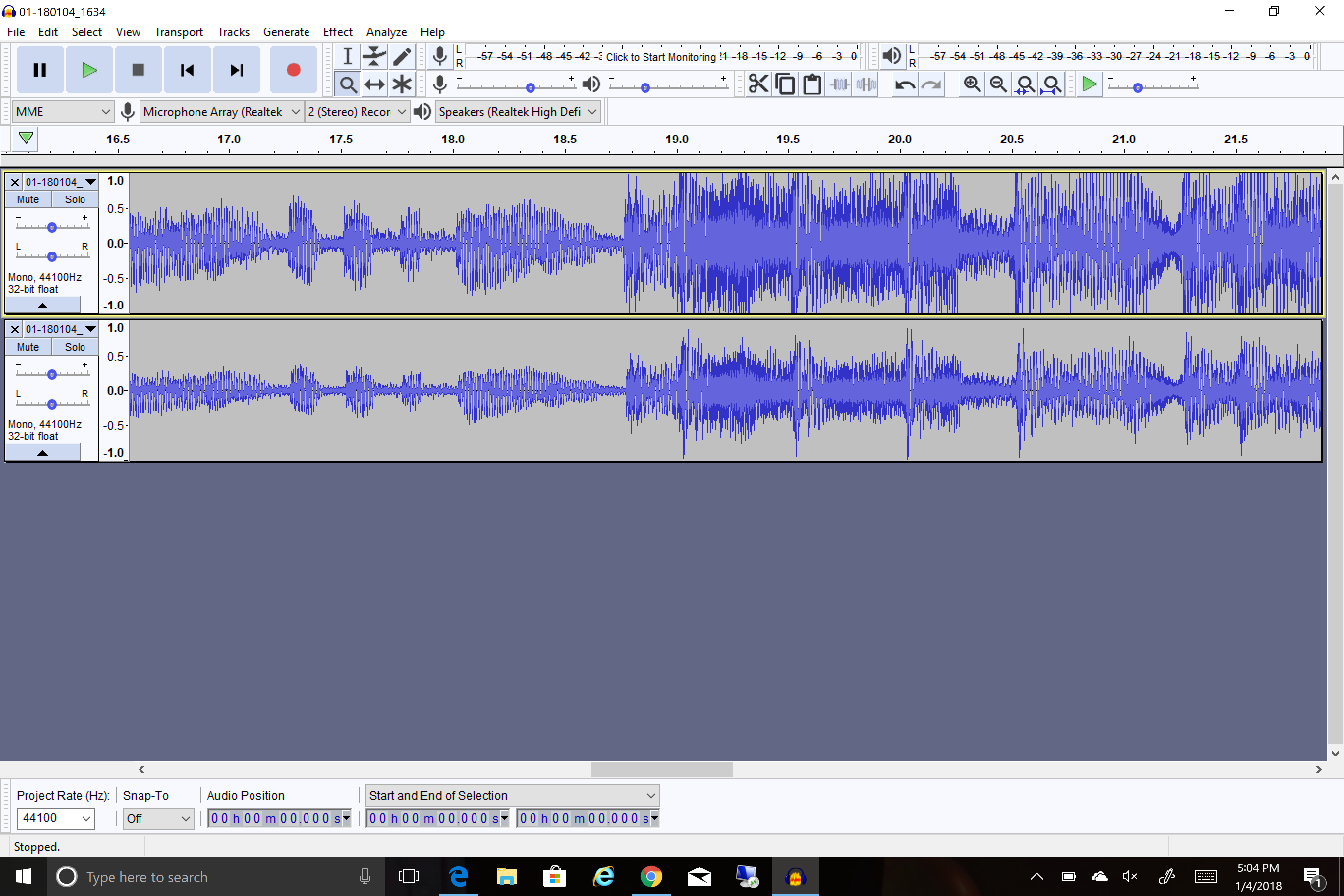

Btw, here's a comparison I did in Audacity. I first recorded these clips from JRiver into the Reaper DAW over Lynx Hilo ASIO. The recording chain is all digital, with no conversions to analog.

I lined up the same track (Awolnation’s “Bad Wolf”) in Audacity; one copy was run through Perfect Declipper and the other was not. Below are the .wav files if you want to hear the difference. Note that Perfect Declipper attenuates the signal by 6 dB, so you need to level-match the two files to compare them fairly. I think you'll notice a nice improvement on a decent playback system.
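
If you'd rather level-match by measurement than by ear, one approach is to compute the gain that makes both clips' RMS levels equal. A "6 dB" offset is a linear factor of about 1.995 (10^(6/20)), not exactly 2. This is a minimal sketch: the function names are hypothetical, and it assumes you've already decoded each file to float samples between -1.0 and 1.0 with whatever tool you like. Turning the unprocessed clip down, rather than the processed one up, avoids pushing any restored peaks past full scale.

```python
import math


def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


def rms(samples):
    """Root-mean-square level of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def matching_gain_db(target, to_adjust):
    """Gain in dB to apply to `to_adjust` so its RMS equals `target`'s."""
    return 20 * math.log10(rms(target) / rms(to_adjust))


def apply_gain(samples, db):
    """Scale every sample by the linear equivalent of `db`."""
    g = db_to_gain(db)
    return [s * g for s in samples]


if __name__ == "__main__":
    processed = [0.25, -0.25, 0.25, -0.25]    # stand-in for the declipped clip
    unprocessed = [0.5, -0.5, 0.5, -0.5]      # stand-in for the original clip
    gain = matching_gain_db(processed, unprocessed)
    print(round(gain, 2))                      # -6.02
    matched = apply_gain(unprocessed, gain)
    print(round(rms(matched), 6))              # 0.25
```

RMS matching is a crude stand-in for perceived loudness; a loudness measure such as ITU-R BS.1770 would be more rigorous, but for two versions of the same track RMS gets you close.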

https://www.dropbox.com/s/hbcu0njjvplth96/01-180104_1634.wav?dl=0

https://www.dropbox.com/s/reqzxhz0ccita72/01-180104_1636.wav?dl=0
